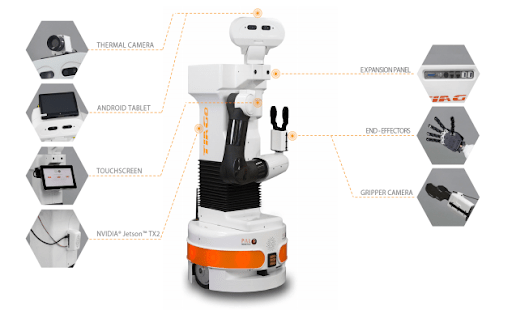

TIAGo Robot: the most versatile research platform for mobile manipulation

Whether your research needs include manipulation, navigation or perception, our versatile TIAGo platform (TIAGo stands for Take It And Go) is ready to adapt to your research, and not the other way around. In this series of blogs on TIAGo for research, this post focuses on using TIAGo for manipulation research. In case you missed it, also see our previous blog on the versatility of TIAGo as a research platform for navigation.

The robot is well suited for applications in healthcare and light industry, particularly thanks to its modular design, as demonstrated by the several European Union (EU) projects that have chosen it as their research platform. TIAGo is a mobile manipulator suitable for research in areas such as manipulation and touch, collaborative robotics and Industry 4.0.

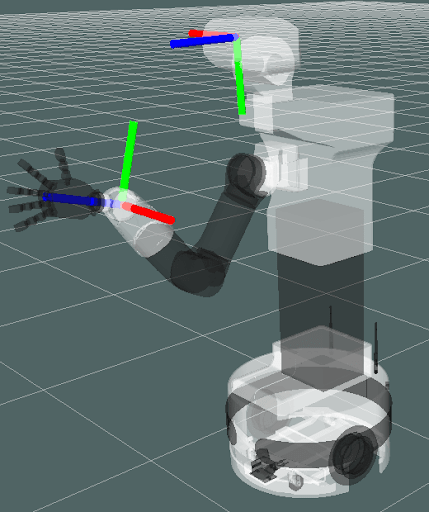

- In terms of manipulation, TIAGo’s 7-degree-of-freedom arm has a large workspace and can reach both the ground and high shelves. The arm includes Harmonic Drive gearing and high-quality torque sensors. The end effector can be either a gripper or a humanoid hand, and the two can be quickly exchanged to perform different manipulation tasks.

- TIAGo provides autonomous navigation, localization, mapping and obstacle avoidance, all accessible through ROS.

- TIAGo includes perception abilities such as facial recognition, emotion recognition and people tracking.

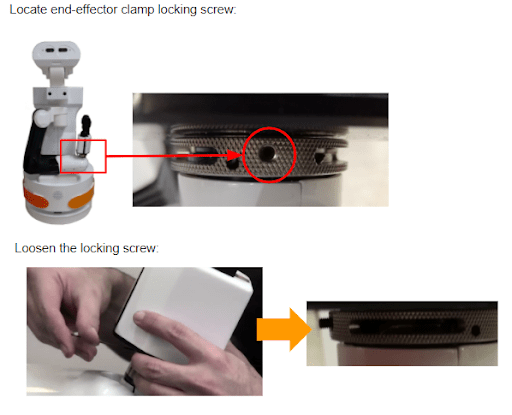

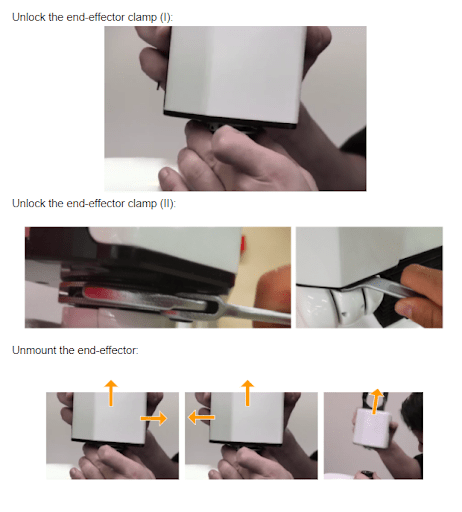

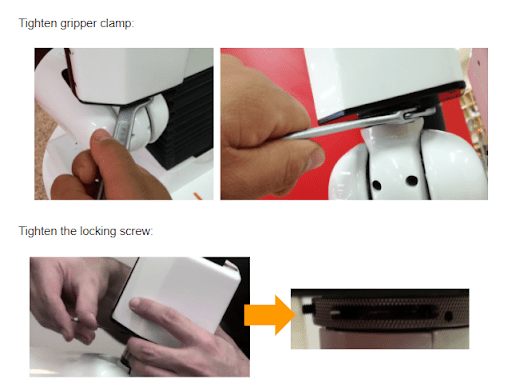

Along with TIAGo’s modularity, one of its most useful features is that the end effector can be easily exchanged between a Hey 5 hand (with fingers) and a gripper for different types of manipulation tasks. Keep reading to learn how to do this.

Looking more specifically at manipulation, here are some of the manipulation features of TIAGo and how to use them.

Gripper control and grasping

The gripper has two motors, each controlling one of the fingers. The gripper can be opened and closed with the joystick.

The gripper also offers a service interface: calling it makes the gripper close its fingers until a grasp is detected. When that happens, the controller keeps the fingers at the positions reached at that moment so that the object stays held.
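To make the close-until-grasped behaviour concrete, here is a minimal Python sketch of that logic on a toy finger model. It is not PAL's controller: the `Finger` class, the `grasp()` function, the step size and the stall-based detection rule (a finger is assumed to be in contact when its commanded position runs ahead of its measured position by more than a tolerance) are all hypothetical choices made for illustration. On the real robot you would simply call the gripper's grasp service.

```python
from dataclasses import dataclass


@dataclass
class Finger:
    """Toy model of one gripper finger (hypothetical, for illustration only)."""
    position: float    # current opening of this finger, in metres
    contact_at: float  # opening at which the finger touches the object

    def command(self, target: float) -> None:
        # The finger follows the command until the object blocks it.
        self.position = max(target, self.contact_at)


def grasp(fingers, step=0.001, closed=0.0, stall_tol=0.0005):
    """Close each finger step by step until it stalls, then hold that position.

    A finger counts as 'in contact' when its measured position lags the
    commanded one by more than stall_tol (assumed detection rule).
    Returns the positions at which the fingers are held.
    """
    targets = [f.position for f in fingers]
    held = [None] * len(fingers)
    while any(h is None for h in held):
        for i, f in enumerate(fingers):
            if held[i] is not None:
                continue
            targets[i] = max(targets[i] - step, closed)
            f.command(targets[i])
            blocked = f.position - targets[i] > stall_tol
            if blocked or targets[i] == closed:
                # Grasp detected (or fully closed): freeze the reached position.
                held[i] = f.position
                f.command(f.position)
    return held


fingers = [Finger(position=0.04, contact_at=0.02), Finger(position=0.04, contact_at=0.025)]
print(grasp(fingers))
```

Each finger stops independently, so an object that is off-centre between the fingers is still held where each finger first meets it.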

We are continuing to develop force sensors in the fingertips. When fingers with these sensors are used, their force measurements feed the embedded grasp monitor, which detects when a part has been grasped.
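A fingertip-force grasp monitor can be sketched in the same spirit. The sketch below is hypothetical: the `GraspMonitor` class, the 1 N threshold and the three-sample window are illustrative assumptions, not the parameters of TIAGo's embedded monitor. It reports a grasp only once every fingertip has measured at least the threshold force for several consecutive samples, which filters out isolated noisy spikes.

```python
from collections import deque


class GraspMonitor:
    """Hypothetical fingertip-force grasp monitor (illustrative sketch).

    Reports a grasp once every fingertip has measured at least `threshold`
    newtons for `window` consecutive samples.
    """

    def __init__(self, n_fingers: int, threshold: float = 1.0, window: int = 3):
        self.threshold = threshold
        # One fixed-length history of above/below-threshold flags per finger.
        self.history = [deque(maxlen=window) for _ in range(n_fingers)]

    def update(self, forces) -> bool:
        # Record whether each fingertip is above the threshold in this sample.
        for hist, force in zip(self.history, forces):
            hist.append(force >= self.threshold)
        # Grasped only if every finger has a full window of above-threshold samples.
        return all(len(h) == h.maxlen and all(h) for h in self.history)


monitor = GraspMonitor(n_fingers=2)
for sample in [(0.2, 0.1), (1.5, 0.3), (1.6, 1.2), (1.7, 1.3), (1.8, 1.4)]:
    print(monitor.update(sample))
```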

Whole body control

Whole body control is available with TIAGo, and its uses include imitating human body movements. On legged robots, whole body control is also what helps the robot walk and keep its balance; in manipulation and grasping, it helps prevent self-collisions.

TIAGo is provided with a stack of tasks able to control its upper-body joints (torso + arm + head). The tasks, from highest to lowest priority, are:

- Self-collision avoidance: prevents the arm from colliding with any other part of the robot while moving

- Joint limit avoidance: ensures joint limits are never reached

- Command the pose of /arm_7_link in Cartesian space: allows the end effector to be sent to any spatial pose

- Command the pose of /xtion_optical_frame in Cartesian space: allows the robot to look in any direction
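The idea of a strict priority order can be illustrated with the classic recursive null-space projection scheme for velocity tasks: each task is solved only within the freedom left over by the tasks above it, so a lower-priority task can never disturb a higher-priority one. This is a generic textbook technique, not PAL's stack implementation, and it assumes every task is a one-dimensional equality task given by a row Jacobian `J` and a desired task velocity `xdot` (hypothetical names). In practice, tasks such as self-collision and joint-limit avoidance are usually formulated as inequalities, which this sketch does not handle.

```python
def solve_task_stack(tasks, n_joints, eps=1e-9):
    """Resolve 1-D velocity tasks with strict priorities (illustrative sketch).

    tasks: list of (J, xdot) pairs ordered from highest to lowest priority,
           where J is a row Jacobian (n_joints numbers) and xdot the desired
           task velocity. Returns the joint velocities qdot.
    """
    qdot = [0.0] * n_joints
    # N projects onto the null space of all higher-priority tasks (starts as I).
    N = [[1.0 if i == j else 0.0 for j in range(n_joints)] for i in range(n_joints)]
    for J, xdot in tasks:
        # Task Jacobian restricted to the remaining freedom: Jt = J N.
        Jt = [sum(J[k] * N[k][j] for k in range(n_joints)) for j in range(n_joints)]
        denom = sum(v * v for v in Jt)
        if denom < eps:
            continue  # no freedom left for this task, so it is dropped
        # Pseudo-inverse of a row vector: Jt^T / (Jt Jt^T).
        pinv = [v / denom for v in Jt]
        # Correct only the part of the task not already achieved by qdot.
        error = xdot - sum(J[k] * qdot[k] for k in range(n_joints))
        qdot = [q + p * error for q, p in zip(qdot, pinv)]
        # Shrink the null space: N <- N - pinv * Jt.
        N = [[N[i][j] - pinv[i] * Jt[j] for j in range(n_joints)] for i in range(n_joints)]
    return qdot


stack = [([1, 1, 0], 1.0), ([0, 1, 0], 2.0), ([0, 0, 1], 3.0), ([1, 0, 0], 5.0)]
print(solve_task_stack(stack, n_joints=3))
```

With three joints, the tasks `qdot[0] + qdot[1] = 1`, `qdot[1] = 2` and `qdot[2] = 3` give `qdot == [-1.0, 2.0, 3.0]`; the fourth task, `qdot[0] = 5`, is dropped because the three higher-priority tasks use up all the freedom.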

Motion planning with MoveIt!

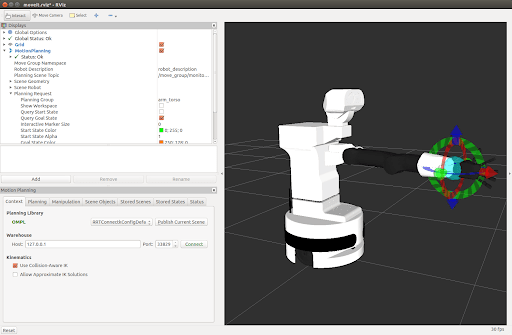

- MoveIt! is state-of-the-art software for mobile manipulation.

- What does it provide for TIAGo?

  - Framework for motion planning and trajectory smoothing

  - ROS Action interface

  - Collision checking

  - Manage collision environment: add/remove (virtual) obstacles

  - Plugins for motion planning and trajectory smoothing

    - Change parameters of the system

    - Develop custom plugins/algorithms

  - Tools to set up a robot configuration

  - GUI by means of an RViz plugin
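The idea behind managing a collision environment (adding and removing virtual obstacles, then checking motions against them) can be shown with a deliberately simplified toy. This is not MoveIt's API: `ToyScene`, `Box` and their methods are hypothetical, obstacles are axis-aligned boxes, only the end-effector position is checked (not the whole arm), and a straight segment is checked by sampling points along it, which can miss obstacles thinner than the sampling step. MoveIt itself relies on a dedicated collision-checking library and the robot's full geometry.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Box:
    """Axis-aligned box obstacle given by its min and max corners (metres)."""
    lo: tuple
    hi: tuple

    def contains(self, p) -> bool:
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


@dataclass
class ToyScene:
    """Hypothetical stand-in for a planning scene: named obstacles plus checks."""
    obstacles: dict = field(default_factory=dict)

    def add(self, name: str, box: Box) -> None:
        self.obstacles[name] = box

    def remove(self, name: str) -> None:
        self.obstacles.pop(name, None)

    def point_in_collision(self, p) -> bool:
        return any(b.contains(p) for b in self.obstacles.values())

    def segment_in_collision(self, a, b, samples: int = 50) -> bool:
        # Discretised check: test evenly spaced points along the segment a -> b.
        for i in range(samples + 1):
            t = i / samples
            p = tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))
            if self.point_in_collision(p):
                return True
        return False


scene = ToyScene()
scene.add("table", Box((0.4, -0.5, 0.0), (0.8, 0.5, 0.7)))  # a virtual table
print(scene.segment_in_collision((0.0, 0.0, 0.5), (1.0, 0.0, 0.5)))
scene.remove("table")
print(scene.segment_in_collision((0.0, 0.0, 0.5), (1.0, 0.0, 0.5)))
```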

Motion Planning GUI (I)

The motion planning GUI is an RViz plugin that:

- Can be used with a real or simulated robot

- Supports visualization of plans without execution

- Lets you select different planning groups

- Allows on-the-fly changes of planning library

Motion Planning GUI (II)

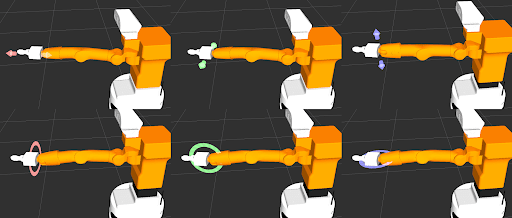

- To specify the end-effector goal, drag the end effector in 3D along its translational and rotational axes.

Motion Planning GUI (III)

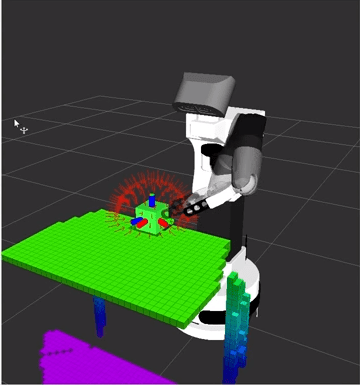

- When the goal state is not in collision, motion planning generates trajectories that go around robot body parts and other objects in the collision environment.

- Objects can be added via the GUI, the action API or an RGB-D sensor (point clouds).
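How point-cloud obstacles lead to trajectories that go around them can be illustrated with a small 2-D toy. Points are binned into occupied grid cells (a much simplified version of the occupancy map MoveIt builds from RGB-D data with OctoMap), and a breadth-first search then finds a shortest 4-connected path that avoids those cells. This is a swapped-in technique for illustration only: MoveIt plans in the robot's joint space, typically with OMPL's sampling-based planners. The function names, resolution and grid bounds are hypothetical.

```python
import math
from collections import deque


def voxelize(points, resolution):
    """Map 2-D points (x, y) in metres to the set of occupied grid cells (i, j)."""
    return {(math.floor(x / resolution), math.floor(y / resolution)) for x, y in points}


def plan_around(start, goal, occupied, bounds):
    """Breadth-first search on a 4-connected grid, avoiding occupied cells.

    start, goal: (i, j) cells; bounds: (imin, imax, jmin, jmax), inclusive.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    imin, imax, jmin, jmax = bounds
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start and reverse them.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        i, j = cell
        for nxt in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            ni, nj = nxt
            if (imin <= ni <= imax and jmin <= nj <= jmax
                    and nxt not in occupied and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None


wall = voxelize([(0.25, 0.05), (0.25, 0.15), (0.25, 0.25), (0.25, 0.35)], resolution=0.1)
print(plan_around((0, 0), (4, 0), wall, bounds=(0, 4, 0, 4)))
```

With a wall of points at x = 0.25 m spanning y = 0.05 to 0.35 m and a 0.1 m resolution, the path from cell (0, 0) to cell (4, 0) detours over the top of the wall through row j = 4; if the grid is limited to rows 0 to 3, no path exists and the function returns `None`.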

Read the official documentation and tutorials here: