The versatility of the mobile manipulator robot TIAGo

Whether your research needs include manipulation, navigation or perception, our versatile TIAGo platform (short for Take It And Go) adapts to your research needs, not the other way around. This is the third post in our blog series on TIAGo for research, and it looks at the features TIAGo offers for research focussed on perception.

The TIAGo robot is well suited to applications in the healthcare sector and light industry, largely thanks to its modular design, as shown by the several European Union (EU) projects that have chosen it as a research platform. TIAGo is a mobile manipulator suited to research in areas including Human-Robot Interaction (HRI), Collaborative Robotics and Deep Learning.

- TIAGo includes perception abilities such as facial recognition, emotion recognition and people tracking.

- TIAGo offers autonomous navigation and localization, mapping and obstacle avoidance, all accessible through ROS. You can read more about these in our previous blog.

- In terms of manipulation, which we covered in a previous blog, TIAGo’s arm has a large workspace thanks to the lifting torso, allowing it to reach both the ground and high shelves. The end effector can be either a gripper or a humanoid hand, and the two can be exchanged quickly.

Perception is crucial for robots to plan, make decisions and operate. It covers detecting people, objects and obstacles and recognising the environment, as well as more advanced features such as recognising voices and emotions.

First, these are the sensors TIAGo uses to perceive its environment:

TIAGo’s sensors

TIAGo is equipped with the following sensors:

- Mobile base:
  - Laser range-finder
  - Sonars
  - IMU
- Head:
  - RGB-D camera
  - Stereo microphones
- Wrist:
  - Force/Torque sensor

Mobile base

Laser range-finder

These three lasers are supported by the TIAGo robot:

The laser scans are published as sensor_msgs/LaserScan messages on the /scan topic.
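To make the message layout concrete, here is a minimal pure-Python sketch of how the ranges in a sensor_msgs/LaserScan map to 2D points in the laser frame. It mirrors the message's standard fields (angle_min, angle_increment, range_min, range_max, ranges) in a plain dataclass so it runs without ROS; in a real node you would read these fields from the messages received on /scan. The helper name scan_to_points is hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LaserScan:
    """Plain stand-in for the relevant sensor_msgs/LaserScan fields."""
    angle_min: float          # angle of the first beam [rad]
    angle_increment: float    # angular step between beams [rad]
    range_min: float          # minimum valid range [m]
    range_max: float          # maximum valid range [m]
    ranges: List[float] = field(default_factory=list)  # measured ranges [m]


def scan_to_points(scan: LaserScan) -> List[Tuple[float, float]]:
    """Hypothetical helper: convert valid beams to (x, y) in the laser frame.

    Beam i points at angle_min + i * angle_increment. Readings that are
    not finite or fall outside [range_min, range_max] are discarded.
    """
    points = []
    for i, r in enumerate(scan.ranges):
        if not math.isfinite(r) or r < scan.range_min or r > scan.range_max:
            continue
        angle = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Three beams at -90, 0 and +90 degrees; the last one is out of range.
    scan = LaserScan(angle_min=-math.pi / 2, angle_increment=math.pi / 2,
                     range_min=0.05, range_max=10.0,
                     ranges=[1.0, 2.0, float("inf")])
    for x, y in scan_to_points(scan):
        print(f"{x:.2f} {y:.2f}")
```

Filtering against range_min and range_max matters because laser drivers commonly report invalid returns as values outside that interval (or as infinity).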

Laser range-finder graphical visualization

The image below shows how the TIAGo robot perceives its surroundings using the laser range-finder:

Sonars

The sonars allow TIAGo to perceive obstacles behind it, where the laser and camera cannot see. The robot is equipped with three rear Devantech SRF05 ultrasonic sensors:

- Frequency: 40 kHz

- Measuring range: 0.03–4 m

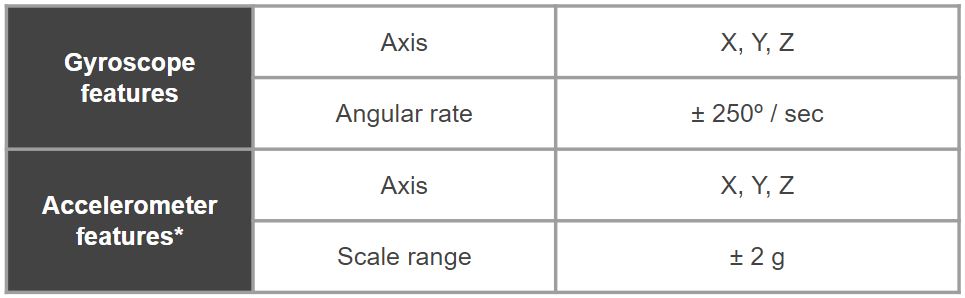
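The SRF05 is a time-of-flight sensor: it emits a 40 kHz ping and times the echo, so the distance is half the round-trip time multiplied by the speed of sound. Here is a minimal sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C) and rejecting readings outside the 0.03–4 m measuring range. The function name is hypothetical.

```python
from typing import Optional

SPEED_OF_SOUND = 343.0  # m/s, assumption: dry air at roughly 20 degrees C
MIN_RANGE = 0.03        # m, SRF05 minimum measuring distance
MAX_RANGE = 4.0         # m, SRF05 maximum measuring distance


def echo_time_to_distance(echo_time_s: float) -> Optional[float]:
    """Hypothetical helper: obstacle distance in metres, or None if out of range.

    The ping travels to the obstacle and back, so the one-way distance
    is half of speed * round-trip time.
    """
    distance = SPEED_OF_SOUND * echo_time_s / 2.0
    if distance < MIN_RANGE or distance > MAX_RANGE:
        return None
    return distance


if __name__ == "__main__":
    # A 5.83 ms echo corresponds to roughly one metre.
    print(echo_time_to_distance(5.83e-3))
```

The same arithmetic explains why ultrasonic readings drift with temperature: the speed of sound in air changes with it, and the distance scales with it linearly.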

Inertial Measurement Unit (IMU)

TIAGo is equipped with an InvenSense MPU-6050 6-axis IMU.
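A 6-axis IMU such as the MPU-6050 combines a 3-axis accelerometer and a 3-axis gyroscope. To illustrate what the accelerometer alone provides, here is a minimal sketch that estimates roll and pitch from the gravity vector while the robot is stationary. In ROS these readings would typically arrive in the linear_acceleration field of a sensor_msgs/Imu message. The axis convention (z up when level) and the function name are assumptions.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float):
    """Hypothetical helper: estimate (roll, pitch) in radians from a static reading.

    Assumes the only acceleration is gravity and that the sensor's z axis
    points up when level (a resting accelerometer then reads about +9.81 on z).
    Yaw cannot be observed from gravity alone; it needs the gyroscope
    (integrated over time) or another heading source.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    return roll, pitch


if __name__ == "__main__":
    # Level robot: gravity entirely on +z, so roll and pitch are zero.
    print(tilt_from_accel(0.0, 0.0, 9.81))
```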

RGB-D camera

TIAGo features an Orbbec Astra RGB-D camera, which you can see in the image below:

RGB image visualization

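The RGB image can be visualized in rviz. Point your ROS environment at the robot's ROS master, then launch rviz with TIAGo's configuration file:

```shell
export ROS_MASTER_URI=http://tiago-0c:11311
rosrun rviz rviz -d `rospack find tiago_bringup`/config/tiago.rviz
```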

Image subscription

ROS provides tutorials on how to write image subscribers:

Running a simple image subscriber with a different transport

Image transports provide different ways to transmit images in ROS. The options are:

- raw: images are not compressed. Useful when the subscriber runs on the same computer as the publisher.

- compressed: images are compressed using JPEG or PNG. Useful when the subscriber runs on a different computer from the publisher.

- theora: useful for streaming video.
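To see why the choice matters, the sketch below estimates the bandwidth of an uncompressed stream and applies the rules of thumb listed above. It assumes a 640×480 RGB image with 3 bytes per pixel at 30 fps, which is an illustrative figure and not a stated TIAGo setting. It also shows how image_transport exposes non-raw transports as sub-topics of the base topic (for example `<base>/compressed`). The base topic name is hypothetical. In the ROS image_transport tutorials, a subscriber's transport is typically selected with the private parameter on the command line, e.g. `_image_transport:=compressed`.

```python
def raw_bandwidth_mb_per_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Uncompressed image bandwidth in megabytes (10**6 bytes) per second."""
    return width * height * bytes_per_pixel * fps / 1e6


def choose_transport(same_machine: bool, video_stream: bool = False) -> str:
    """Hypothetical helper encoding the rules of thumb for each transport."""
    if same_machine:
        return "raw"          # no compression cost, no network involved
    if video_stream:
        return "theora"       # video codec that exploits similarity between frames
    return "compressed"       # per-frame JPEG/PNG for remote subscribers


def transport_topic(base_topic: str, transport: str) -> str:
    """image_transport publishes non-raw transports as sub-topics of the base topic."""
    return base_topic if transport == "raw" else f"{base_topic}/{transport}"


if __name__ == "__main__":
    # Assumed stream: 640x480 RGB8 at 30 fps.
    print(f"{raw_bandwidth_mb_per_s(640, 480, 3, 30):.1f} MB/s raw")
    t = choose_transport(same_machine=False)
    # Hypothetical base topic name, for illustration only.
    print(transport_topic("/camera/rgb/image_raw", t))
```

At roughly 27.6 MB/s, a single raw colour stream of that size would take up most of a typical Wi-Fi link. That is why the compressed transports are the usual choice off-board.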

Point cloud processing

ROS and PCL provide tutorials on how to process point clouds:

ROS pcl tutorials
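A common first step in those tutorials is downsampling a cloud with PCL's VoxelGrid filter, which replaces all points falling in each cubic voxel with their centroid. The pure-Python sketch below illustrates the same idea on a list of (x, y, z) tuples so it runs without PCL. It is not PCL's implementation; in practice you would apply pcl::VoxelGrid (or an equivalent) to the camera's point cloud. The function name and the 5 cm leaf size are illustrative assumptions.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], leaf_size: float) -> List[Point]:
    """Hypothetical helper: replace the points in each voxel by their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis.
        key = (math.floor(x / leaf_size),
               math.floor(y / leaf_size),
               math.floor(z / leaf_size))
        buckets[key].append((x, y, z))
    centroids = []
    for pts in buckets.values():
        n = len(pts)
        centroids.append((sum(p[0] for p in pts) / n,
                          sum(p[1] for p in pts) / n,
                          sum(p[2] for p in pts) / n))
    return centroids


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 1.02), (0.03, 0.02, 1.03), (0.52, 0.52, 1.52)]
    # The first two points share a 5 cm voxel; the third is on its own.
    print(len(voxel_downsample(cloud, leaf_size=0.05)))
```

Downsampling first keeps later steps such as plane segmentation or clustering fast enough to run on board.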

Stereo microphones

TIAGo is also equipped with a USB-SA Array Microphone (stereo microphones):

- Recommended working distance: 30.5–122 cm

- Acoustic signal reduction at 1 kHz outside the 30° beam: 15–30 dB

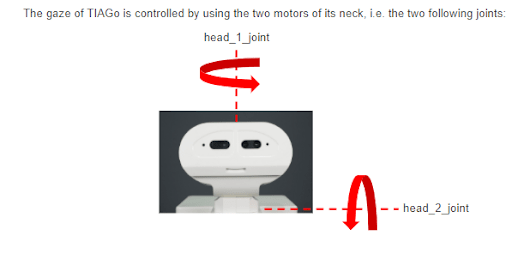
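To put the 15–30 dB figure in perspective, a reduction of L dB corresponds to an amplitude ratio of 10^(-L/20) and a power ratio of 10^(-L/10). The short sketch below converts the two ends of the quoted range. It is plain arithmetic and does not model the microphone's beamformer.

```python
def db_reduction_to_ratios(reduction_db: float):
    """Return (amplitude_ratio, power_ratio) for a reduction given in dB."""
    amplitude_ratio = 10 ** (-reduction_db / 20)
    power_ratio = 10 ** (-reduction_db / 10)
    return amplitude_ratio, power_ratio


if __name__ == "__main__":
    for db in (15, 30):
        amp, power = db_reduction_to_ratios(db)
        print(f"{db} dB: amplitude x{amp:.3f}, power x{power:.4f}")
```

At 1 kHz, sound from outside the beam therefore keeps roughly 18% of its amplitude at 15 dB and about 3% at 30 dB.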

Controlling the robot gaze

TIAGo’s gaze is controlled with the two motors in its neck, i.e. the following two joints:

A node that provides an action interface for pointing the head towards a given point in space is documented at http://wiki.ros.org/head_action
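Pointing the head at a 3D point comes down to finding the pan and tilt angles of the two neck joints. The sketch below shows that geometry for a target expressed in a frame centred on the head. It assumes x forward, y left and z up (the REP 103 convention) and that positive tilt looks down. The actual joint zero positions and sign conventions on TIAGo are not given here, so treat them as assumptions. A head action server solves this for you, including the frame transforms.

```python
import math


def look_at(x: float, y: float, z: float):
    """Hypothetical helper: (pan, tilt) in radians to aim the head at (x, y, z).

    Assumed frame: origin at the head's pan/tilt axes, x forward, y left,
    z up. Pan is positive to the left; tilt is positive looking down
    (a hypothetical sign convention; real joints may differ).
    Offsets between the pan and tilt axes and the camera are ignored.
    """
    pan = math.atan2(y, x)
    horizontal = math.hypot(x, y)
    tilt = math.atan2(-z, horizontal)
    return pan, tilt


if __name__ == "__main__":
    # A point 1 m ahead and 1 m below the head: pan 0, tilt 45 degrees down.
    pan, tilt = look_at(1.0, 0.0, -1.0)
    print(math.degrees(pan), math.degrees(tilt))
```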

Lastly, TIAGo is equipped with software that enables it to recognise faces and emotions.

Facial perception

TIAGo comes with the VeriLook Face SDK, which provides:

- Face detection
- Face matching
- Gender classification
- Facial attributes: smile, open mouth, closed eyes, glasses and dark glasses
- Emotion recognition, with confidences for six basic emotions:
  - anger
  - disgust
  - fear
  - happiness
  - sadness
  - surprise
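Because the SDK reports a confidence for each emotion rather than a single label, an application usually has to decide which emotion, if any, to act on. Here is a minimal sketch that assumes the confidences have already been read into a dictionary with values in [0, 1]. The 0.5 threshold and the function name are illustrative, and this is not the VeriLook API.

```python
from typing import Dict, Optional

BASIC_EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")


def dominant_emotion(confidences: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Hypothetical helper: most confident basic emotion, or None below threshold.

    Assumes confidences are normalised to [0, 1]; missing emotions count as 0.
    """
    best = max(BASIC_EMOTIONS, key=lambda e: confidences.get(e, 0.0))
    return best if confidences.get(best, 0.0) >= threshold else None


if __name__ == "__main__":
    reading = {"happiness": 0.82, "surprise": 0.35, "sadness": 0.05}
    print(dominant_emotion(reading))
```

Returning None below the threshold lets the robot ignore ambiguous expressions instead of reacting to noise.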

To teach TIAGo to recognise faces:

- First, select the name of the database to use

- Start learning a new person’s face

- Stand in front of the robot for a while, moving your head slightly and changing your facial expression
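The likely purpose of moving your head and changing expression is to give the enrolment several varied samples of the same face. The sketch below illustrates that idea generically. Each sample is assumed to be a face feature vector (an embedding). Enrolment averages the samples into a template stored under a name in a database, and matching picks the closest template by cosine similarity. The vectors, threshold and function names are hypothetical. This is not how VeriLook works internally, nor its API.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = List[float]


def _normalise(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0:
        raise ValueError("cannot normalise a zero vector")
    return [c / norm for c in v]


def enrol(database: Dict[str, Vector], name: str, samples: List[Sequence[float]]) -> None:
    """Hypothetical helper: store the mean of the normalised samples as a template."""
    unit = [_normalise(s) for s in samples]
    mean = [sum(col) / len(unit) for col in zip(*unit)]
    database[name] = _normalise(mean)


def match(database: Dict[str, Vector], probe: Sequence[float],
          threshold: float = 0.8) -> Optional[str]:
    """Hypothetical helper: best-matching name by cosine similarity, or None."""
    p = _normalise(probe)
    best_name, best_score = None, -1.0
    for name, template in database.items():
        score = sum(a * b for a, b in zip(p, template))  # cosine of unit vectors
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


if __name__ == "__main__":
    db: Dict[str, Vector] = {}  # one "database", selected by the caller
    enrol(db, "alice", [[1.0, 0.1, 0.0], [0.9, 0.0, 0.1], [1.0, 0.0, 0.0]])
    enrol(db, "bob", [[0.0, 1.0, 0.1], [0.1, 0.9, 0.0]])
    print(match(db, [0.95, 0.05, 0.05]))
```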

NVIDIA Jetson TX2 for Deep Learning

TIAGo offers an NVIDIA Jetson TX2 add-on that provides the computational power for many Deep Learning-based perception algorithms. The Jetson TX2 is a power-efficient embedded AI computing device with 8 GB of memory. With the Jetson TX2 we also provide a Google TensorFlow Object Detection system.
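Models exported with the TensorFlow Object Detection API typically return detection_boxes (normalised [ymin, xmin, ymax, xmax]), detection_scores, detection_classes and num_detections. Here is a hedged sketch of the post-processing step. It assumes those outputs have already been converted to plain Python lists and integers, keeps only confident detections and converts their boxes to pixel coordinates. The 0.5 threshold and the function name are illustrative, and this is not necessarily the pipeline shipped with TIAGo.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def filter_detections(boxes: Sequence[Sequence[float]], scores: Sequence[float],
                      classes: Sequence[int], num_detections: int,
                      image_width: int, image_height: int,
                      min_score: float = 0.5) -> List[Tuple[int, float, Box]]:
    """Hypothetical helper: keep detections with score >= min_score, in pixels.

    boxes are normalised [ymin, xmin, ymax, xmax] in [0, 1]; only the
    first num_detections entries are valid.
    """
    results = []
    for i in range(num_detections):
        if scores[i] < min_score:
            continue
        ymin, xmin, ymax, xmax = boxes[i]
        pixel_box = (round(xmin * image_width), round(ymin * image_height),
                     round(xmax * image_width), round(ymax * image_height))
        results.append((int(classes[i]), scores[i], pixel_box))
    return results


if __name__ == "__main__":
    boxes = [[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]]
    scores = [0.9, 0.3]
    classes = [1, 44]
    print(filter_detections(boxes, scores, classes, 2, 640, 480))
```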

TIAGo is used in European research projects including OpenDR (creating an open deep learning toolkit), SHAPES (deploying digital solutions to support healthy and independent living for older people), EVOLVED-5G (testing, validating and certifying network applications), NeuTouch (improving artificial tactile systems and building robots that can help humans in daily tasks), SeCOIIA (secure digital transition of manufacturing processes), RobMoSys (building an open, multi-domain European robotics software ecosystem), SIMBIOTS (introducing robotics into new industrial processes and applications) and, recently, Co4Robots (collaborative robots in industrial settings).

ROS tutorials are available to help you get started with TIAGo’s open source simulation, which you can download online. To find out more about PAL Robotics and the different TIAGo customizations available, including TIAGo Titanium, TIAGo Steel, TIAGo Iron and TIAGo++, visit our website. You can also create your own TIAGo with your chosen configuration; find out more here. Don’t hesitate to get in touch with our experts to discuss how TIAGo can meet your research needs.

Don’t miss out on our robotics blog!